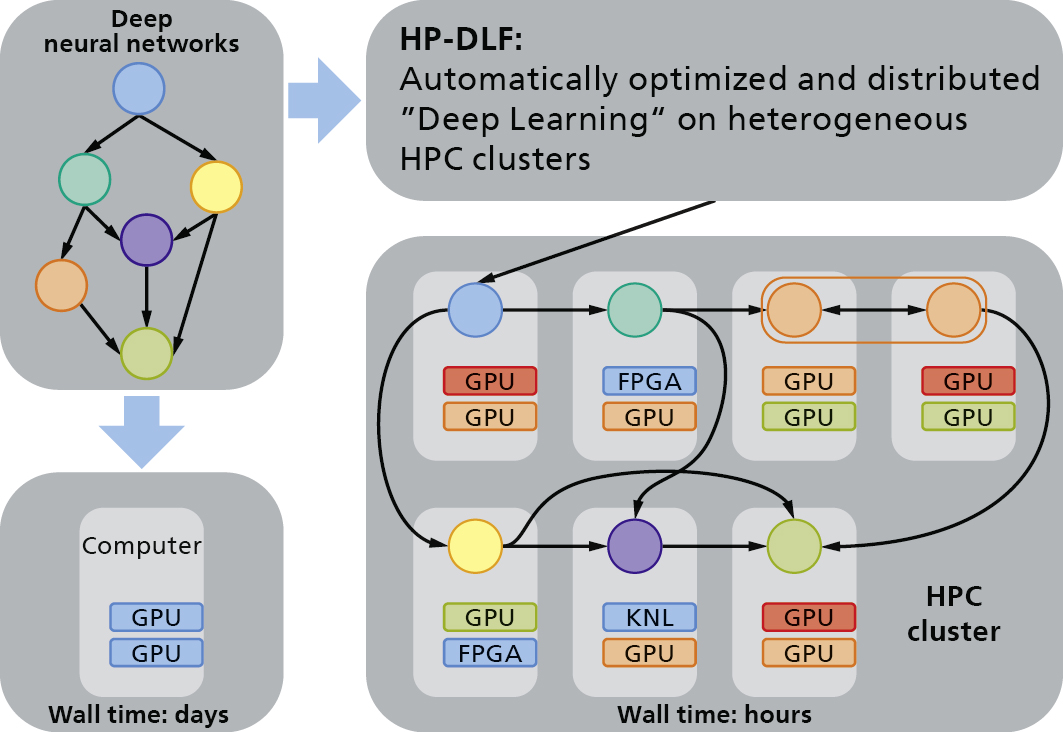

The goal of the BMBF project »High Performance Deep Learning Framework« (HP-DLF) is to provide researchers and developers in the »Deep Learning« domain with easy access to current and future high-performance computing systems.

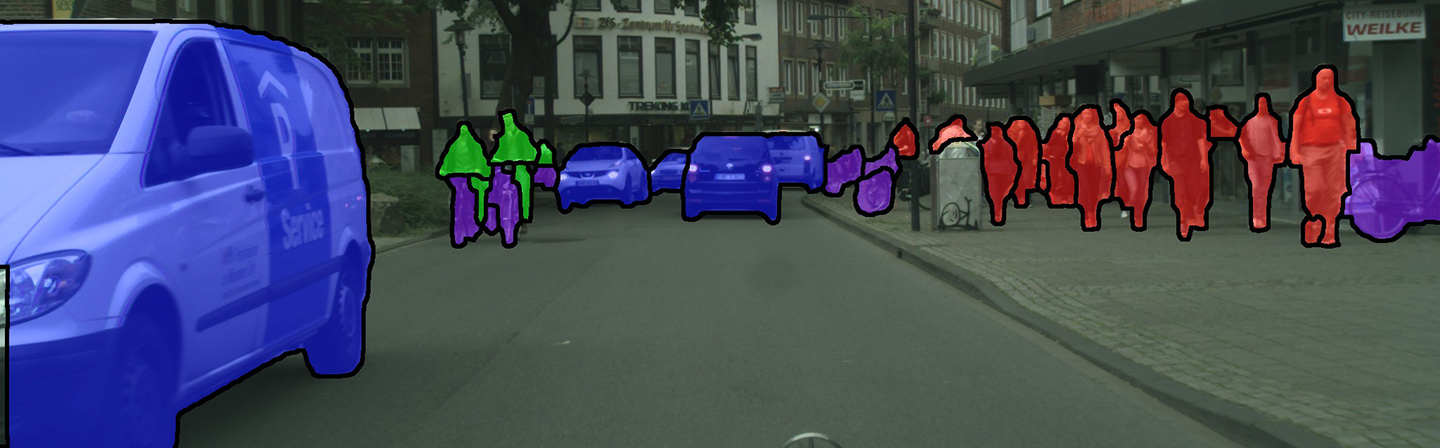

How does an autonomous car recognize pedestrians and other road users? How does everyday speech recognition work? How do Internet search engines recognize people in photos? The answer is: with machine learning algorithms. In recent years, considerable progress has been made in the field of machine learning, and a significant part of this success is due to the further development of so-called »Deep Learning« algorithms.

In the course of this development, ever larger and more complex artificial neural networks are being designed and trained. However, this approach, although successful in many practical applications, requires enormous computing effort and a great deal of training data. The further development of »Deep Learning« therefore depends on methods and infrastructures that keep the training of increasingly complex neural networks computationally feasible in the future.

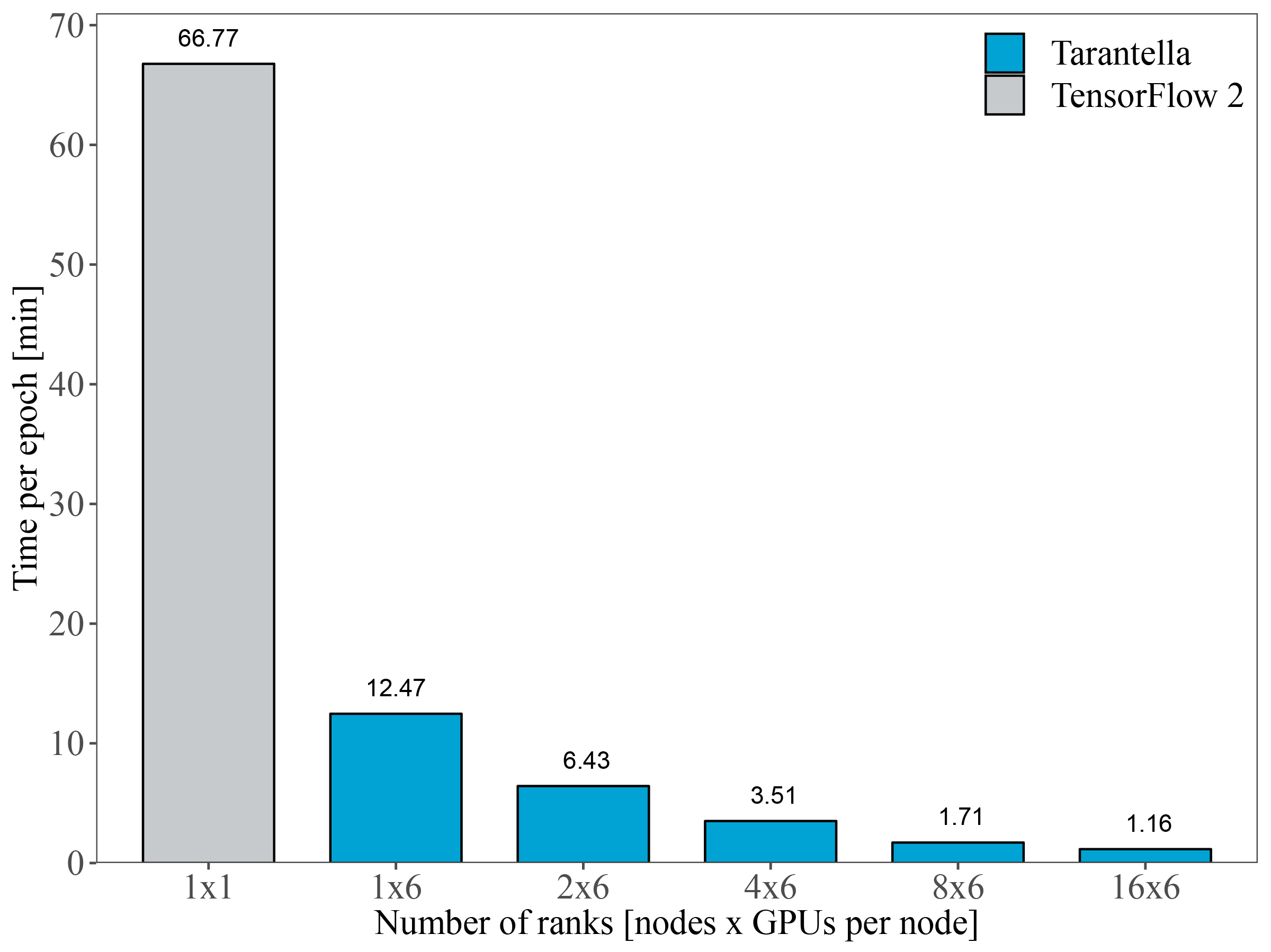

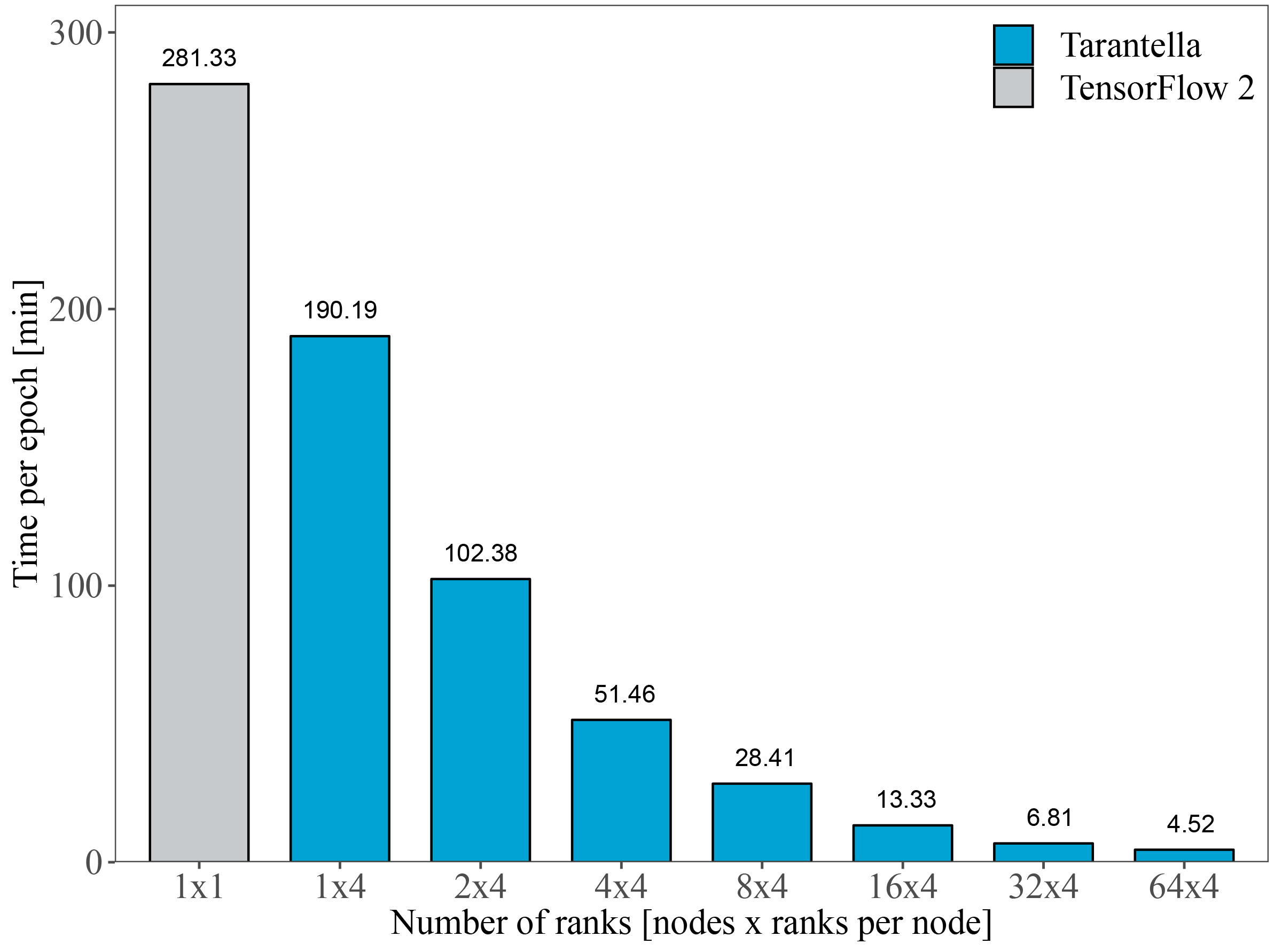

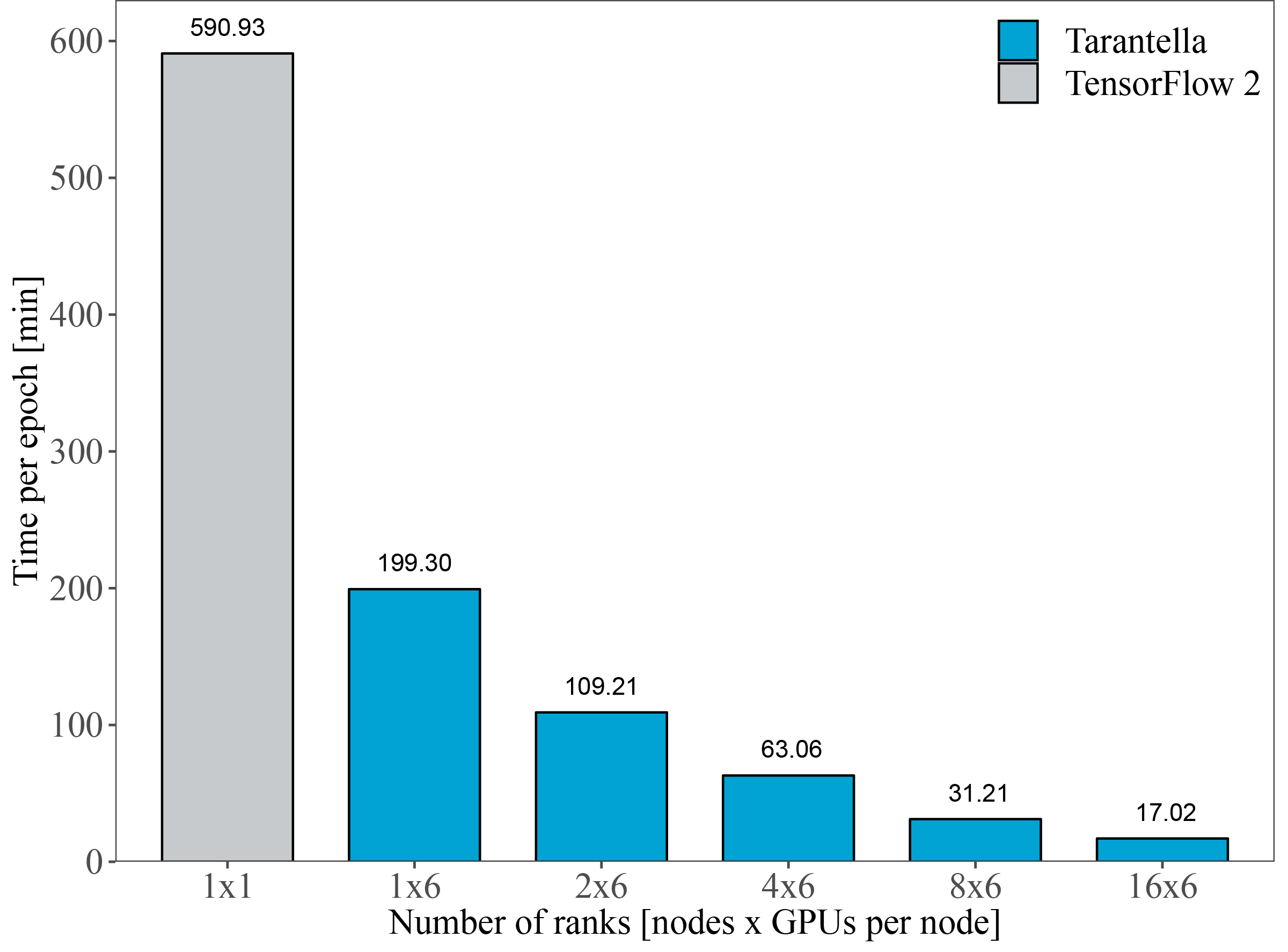

Within HP-DLF, we have developed the open-source framework Tarantella, which builds on state-of-the-art technologies from both deep learning and high-performance computing (HPC) and enables scalable training of deep neural networks on supercomputers.